3-D FDTD code with CRBC/DAB Boundary Conditions using MPI¶

This tutorial explains the code contained primarily in the files yee_mpi.cpp, yee_mpi.hpp, and yee_mpi_example.cpp.

Warning!

This code is meant to serve as an example of how the CRBC/DAB library might be used in conjunction with MPI. We are not officially supporting MPI at this time, but we will try to answer related questions if we are able.

This code has also experienced unexplained deadlock/stalling issues in testing. We believe that this may have been caused by memory and network problems, but have not been able to verify this theory. If you experience issues or find a bug, please contact John LaGrone at "jlagrone at smu dot edu".

Introduction¶

This code implements the finite difference time-domain solution of Maxwell’s equations (curl formulation) over a 3-D Cartesian lattice. The grid is terminated using Double Absorbing Boundaries (DAB).

Before beginning, it would be a good idea to understand the serial implementation of the 3D Yee Scheme. The C++ wave equation example may also be helpful in understanding how the C++ library interface works.

What this program does¶

At the global level, this program is meant to do exactly the same thing as the serial example of the 3D Yee Scheme, but here we will divide the work among the available MPI processes. We consider Maxwell’s equations in a homogeneous, isotropic, dielectric material is given by

subject to the constraints

To discretize these equations using Yee’s scheme on a rectangular domain \([x_L, x_R] \times [y_L, y_R] \times [z_L, z_R]\) with mesh spacings of \(\Delta x\), \(\Delta y\), and \(\Delta z\), in the \(x\), \(y\), and \(z\) directions, respectively, we define

We choose a time step size, \(\Delta t\), satisfying

with the wave speed given by

Letting

the fields are approximated on the staggered space time grids:

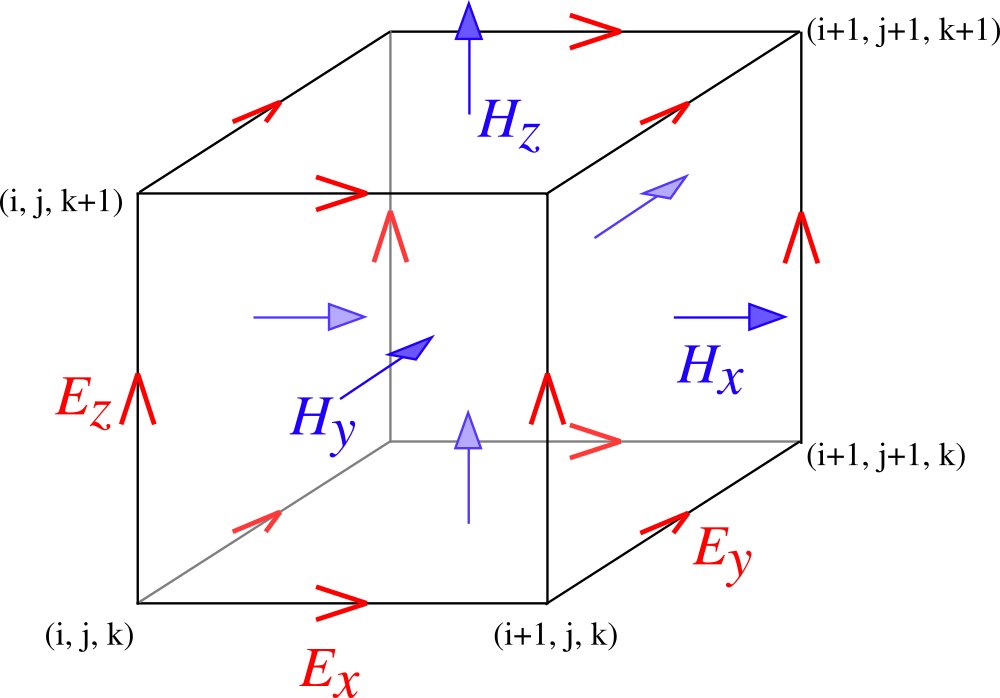

where we require that the domain is terminated such that the tangential E components and the normal H component are located on the boundaries. This corresponds to having an integer number of Yee cells that match the illustrated Spatial configuration of a Yee cell.

Spatial configuration of a Yee cell.¶

Finally, the fields are evolved with

We will use initial conditions generated from a Gaussian point source.

Class Declaration and Code Structure¶

First we briefly describe the definition of the yee_updater class that is declared in header file yee_mpi.hpp.

Include files¶

First we include the most generic C++ interface to the CRBC/DAB boundary conditions

#include "crbc_updates.hpp"

We include the header file for an exact solution routine so we can generate initial conditions and calculate errors

// Header file for exact solution routines

#include "solutions.hpp"

Next we include the standard template library vectors and arrays to minimize the amount of memory we have to directly manage

// for std::vector

#include <vector>

// for std::array

#include <array>

Finally, we include the Message Passing Interface library

// include MPI for parallel implementation

#include <mpi.h>

yee_updater class declaration¶

For simplicity, we declare a C++ class assuming that the number of processes will be a perfect cube and the domain will also be a cube. The primary inputs will be an MPI communicator, the number of processes to use, the width of the domain, the grid spacing, simulation time, and DAB parameters. The remaining inputs are primarily to modify the solution and output parameters.

class yee_updater

{

public:

/// constructor

/// \param[in] comm MPI communicator

/// \param[in] nprocs the number of processes to use in each direction

/// \param[in] w the approximate domain width (may be changed slighty due to discretization)

/// \param[in] n number of grid points

/// \param[in] T the total simulation time

/// \param[in] CRBC_T CRBC time parameter (usually CRBC_T = T)

/// \param[in] CRBC_P the number of CRBC recursions

/// \param[in] io_t approximately how often to generate output

/// \param[in] skip the stride to use when sampling errors

/// \param[in] eps permittivity

/// \param[in] mu permeability

/// \param[in] gamma roughty 1/variance of the Gaussian pulse used for ICs

/// \param[in] tau how far in the past the source pulse was turned on (> 0)

/// \param[in] dab_wt weight factor for DAB in load balancing

yee_updater(MPI_Comm comm,

const int &nprocs,

const double &w,

const int &n,

const double &T,

const double &CRBC_T,

const int &CRBC_P,

const double &io_t = 0.05,

const int &skip = 1,

const double &eps = 1.0,

const double &mu = 1.0,

const double &gamma = 160.0,

const double &tau = 0.35,

const int &dab_wt = 3);

virtual ~yee_updater();

Next we declare the remaining public functions. Notably, a function to run the simulation and one to free any memeroy associated with the MPI communicators. We also declare functions to print out some information about memory usage and timing data.

/// run the simulation

void run();

/// free the communicators created internally

void free_comms();

/// function to display the approximate memory usage in MB

void print_mem_use() const;

/// function to display timing information

void print_timing_data() const;

We declare most of the data structures we will use in the following. Here, we have everything that was in the serial example and we additionally have more arrays to store indexing and relational information for each of the MPI processes as well as buffers for the send and receives. We use std::vectors here so we don’t have to worry about freeing memory.

private:

// storage for field values

std::vector<double> E[3], H[3];

double Hcoef, Ecoef;

// storage for mpi messages. The lengths correspond to worst case situations

std::vector<double> E_sbuf[6];

std::vector<double> E_rbuf[6];

std::vector<double> E_edge_sbuf[12];

std::vector<double> E_edge_rbuf[12];

std::vector<double> DAB_sbuf[6];

std::vector<double> DAB_rbuf[6];

std::vector<double> DAB_corner_sbuf[12];

std::vector<double> DAB_corner_rbuf[12];

double eps, mu, gamma, tau, io_t, c;

double T, dt, h, Etime, Htime;

double tol, CRBC_T;

double coord[3], domain_width;

int nprocs, nprocs_cubed;

int nx, ny, nz;

int maxn;

int ntsteps;

int skip;

int dab_wt;

bool isBoundaryProc;

crbc::BoundaryProperties::Boundary procBounds[6];

int MPI_DIR[6];

int my_id, cart_rank[3];

std::vector<int> MPI_EDGE_DIR;

std::vector<int> send_dirs, send_mpi_dirs, send_sides[4], corner_mpi_dirs[12];

std::array<int, 3> send_corners[12];

std::vector< std::array<int, 2> > send_edges;

crbc::CrbcUpdates<3, double, int> bound_upd[3];

MPI_Comm grid_comm, glob_comm;

std::vector<MPI_Request> send_req, recv_req;

std::vector<MPI_Request> DAB_send_req, DAB_recv_req;

std::vector<int> DAB_props, rDAB_props;

std::vector<double> DAB_refl, rDAB_refl;

double create_comm_t, alloc_mem_t, init_dab_t, step_E_t, step_inner_H_t, \

step_outer_H_t, step_DAB_t, send_DAB_t, recv_DAB_t, send_E_t, \

recv_E_t, sol_t, load_init_conds_t, calc_norm_t, calc_err_t, calc_params_t;

double dab_mem_use;

maxwell_solutions::MW_FreeSpace sol_obj;

The next set of functions are used to set up the problem. These functions will try to load balance the problem (in a simple way), create the communicators, load initial conditions, and set up the boundary updaters.

/// function to calculate parameters and do some basic load balancing

void calc_params();

/// function to create the internal mpi comm. It also labels the boundaries.

void create_mpi_comm(MPI_Comm comm);

/// function to set up the solution routines

void init_solutions();

/// load the initial conditions

void load_initial_conds();

/// function to allocate memory

void allocate_memory();

/// function that sets up the DAB boundary updaters

void init_DAB();

The functions are essentially the same as the serial case and serve to update the E and H fields. We split the H-field updates to be for interior and boundary updates so we can do some updates while we wait for message passing to complete.

/// evolve the E fields

void step_E();

/// evolve the interior H fields

void step_inner_H();

/// evolve the H fields on the process boundaries

void step_outer_H();

/// update the DAB layers

void step_DAB();

/// copy the DAB updates back into the interior

void copy_DAB();

These functions are simply commonly used loops for copying data between the DAB library and the interior of our domain.

/// often used loop that copies data from the DAB to the interior

void get_dab_vals_loop(std::vector<double> &buffer,

crbc::CrbcUpdates<3, double, int> &updater,

const int &side,

const int *low,

const int *high,

const int *plow,

const int *phigh,

const bool &isedge = false);

/// often used loop that copies data from the interior to the DAB

void set_dab_vals_loop(std::vector<double> &buffer,

crbc::CrbcUpdates<3, double, int> &updater,

int &count,

const int &side,

const int *low,

const int *high,

const int *plow,

const int *phigh,

const bool &isedge = false);

The bulk of the new code is in the following functions which handle the sending and receiving of the MPI messages for the boundary values and the field values.

/// function to indentify the sides and edges that need to be sent to update

/// the DAB layer

void calc_DAB_send_params();

/// send DAB values between processes

void send_DAB();

/// recieve DAB values from neighboring processes

void recv_DAB();

/// send E field values to neighboring processes

void send_E();

/// recieve E field values form neighboring processes

void recv_E();

Finally, we have functions to compute the norm and error of the solutions.

/// calculate the norm at the current time

double calc_norm();

/// calculate the error at the current time

double calc_error();

Class Definitions¶

The definitions for the yee_updater class are implemented in the file yee_mpi.cpp.

Includes¶

We begin by including the header file containing the class declarations:

#include "yee_mpi.hpp"

Next we include the routines needed to produce output and calculate the error and norms

// Needed for C++ output

#include <iostream>

// This has the declarations of the 'sqrt' and 'abs' functions

#include <cmath>

// We use this for std::accumulate

#include <numeric>

Finally, we optionally include OpenMP to allow for threading

// optional OpenMP

#if USE_OPENMP

#include <omp.h>

#endif

Constructor¶

The primary function of the constructor is to set up all of the problem parameters. It expects to receive the MPI communicator to use, the number of process to use in each direction (nprocs is the side length, not the total number), the width of the domain, the number of grid points, and the simulation time. It additionally takes the DAB/CRBC time parameter (usually this should be the same as the simulation time) and the number of recursions to use. The remaining parameters are optional and set the output time and sampling frequency and the solution parameters. There is also a parameter to adjust how to scale the work in the DAB layers for load balancing purposes (in experiements choosing this to be 3-6 seems to work well in most cases).

We copy these inputs and set all the timer variables to 0. Note that the majority of the MPI barriers in this section of code are unnecessary and are only here to try to help isolate potential problems in the code.

yee_updater::yee_updater(MPI_Comm comm,

const int &nprocs,

const double &w,

const int &n,

const double &T,

const double &CRBC_T,

const int &CRBC_P,

const double &io_t,

const int &skip,

const double &eps,

const double &mu,

const double &gamma,

const double &tau,

const int &dab_wt)

{

// save inputs

this->T = T;

this->CRBC_T = CRBC_T;

this->CRBC_P = CRBC_P;

domain_width = w;

this->nprocs = nprocs;

n_global = n;

this->io_t = io_t;

this->skip = skip;

this->eps = eps;

this->mu = mu;

this->gamma = gamma;

this->tau = tau;

this->dab_wt = dab_wt;

// compute the wave speed;

c = 1.0 / std::sqrt(eps*mu);

// make a copy of the communicator

MPI_Comm_dup(comm, &glob_comm);

// initialize timers to 0

calc_params_t = create_comm_t = alloc_mem_t = init_dab_t = step_E_t = \

step_inner_H_t = step_outer_H_t = step_DAB_t = send_DAB_t = \

recv_DAB_t = send_E_t = recv_E_t = sol_t = load_init_conds_t = \

calc_norm_t = calc_err_t = 0.0;

Then, we set up a cartesian communicator and calculate the parameters for the simulation and load balancing. The functions create_mpi_comm and calc_params are described in more detail below, but the basic idea is to create a topologically aware communicator so each process knows its position in the domain and to try to distrubute the work to each process in an equitable manner while taking into account the fact that the DAB updates are more expensive than the Yee updates.

// create cartesian communicator

create_mpi_comm(glob_comm);

// calculate grid and time step size

if (grid_comm != MPI_COMM_NULL) {

try {

calc_params();

} catch (const std::exception& e) {

std::cerr << "id = " << my_id

<< " failed in calc_params() --- a standard exception was caught, with message '"

<< e.what() << "'" << std::endl;

MPI_Abort(glob_comm, -2);

}

// wait for all processes to finish calculating parameters

MPI_Barrier(grid_comm);

}

Next we allocate the bulk of the memory for the field values. Note that we do not allocate the send and receive buffers here (it would be a good idea to do so).

// allocate memory

if (grid_comm != MPI_COMM_NULL) {

try {

allocate_memory();

} catch (const std::exception& e) {

std::cerr << "id = " << my_id

<< " failed in allocate_memory() --- a standard exception was caught, with message '"

<< e.what() << "'" << std::endl;

MPI_Abort(glob_comm, -2);

}

// wait for all processes to finish allocating memory

MPI_Barrier(grid_comm);

}

We now initialize the boundary updater objects using the DAB library

// initialize DAB updaters

if (grid_comm != MPI_COMM_NULL) {

try {

init_DAB();

} catch (const std::exception& e) {

std::cerr << "id = " << my_id

<< " failed in init_DAB() --- a standard exception was caught, with message '"

<< e.what() << "'" << std::endl;

MPI_Abort(glob_comm, -2);

}

// wait for all processes to set up DAB updaters (if needed)

MPI_Barrier(grid_comm);

}

Finally, we set up the solution routine. The solution we will use is described in the numerical results page and the code is availabe at solutions.cpp and solutions.hpp.

// set up the solution routine

if (grid_comm != MPI_COMM_NULL) {

try {

init_solutions();

} catch (const std::exception& e) {

std::cerr << "id = " << my_id

<< " failed in init_solutions() --- a standard exception was caught, with message '"

<< e.what() << "'" << std::endl;

MPI_Abort(glob_comm, -2);

}

// wait for all processes to set up solution routines

MPI_Barrier(grid_comm);

}

Routine to run the Simulation¶

The following routine is responsible for running the actual simulation. It starts by determining how many time steps to take between outputs and reserves memory to save these outputs. Then the initial conditions are load and we calculate the norm of the initial data so we can calculate relative errors later.

void yee_updater::run()

{

double loc_norm, glob_norm, loc_err, glob_err;

int tskip;

int tstep;

std::vector<double> err;

std::vector<double> time;

// figure out how many time steps should be taken between outputs

tskip = io_t/dt;

// reserve memory for errors

if (my_id==0) {

err.reserve((ntsteps / tskip) + 1);

time.reserve((ntsteps / tskip) + 1);

}

// load initial conditions

if (grid_comm != MPI_COMM_NULL) {

try {

load_initial_conds();

} catch (const std::exception& e) {

std::cerr << "id = "

<< my_id << " failed in load_initial_conds() --- a standard exception was caught, with message '"

<< e.what() << "'" << std::endl;

MPI_Abort(glob_comm, -2);

}

MPI_Barrier(grid_comm);

}

// calculate norm of intitial conditions

if (grid_comm != MPI_COMM_NULL) {

try {

loc_norm = calc_norm();

} catch (const std::exception& e) {

std::cerr << "id = " << my_id

<< " failed in calc_norm() --- a standard exception was caught, with message '"

<< e.what() << "'" << std::endl;

MPI_Abort(glob_comm, -2);

}

MPI_Barrier(grid_comm);

}

Next we collect the norm (squared) from each of the processes onto a single process using MPI_Reduce.

// use mpi_reduce to calculate global norm

glob_norm = 0.0;

if (grid_comm != MPI_COMM_NULL) {

if (MPI_Reduce(&loc_norm, &glob_norm, 1, MPI_DOUBLE, MPI_SUM, 0, grid_comm) != MPI_SUCCESS)

std::cerr << "MPI_Reduce failed, norm calculation" << std::endl;

}

if (my_id == 0) {

glob_norm = std::sqrt(glob_norm);

}

Next we actually run the simulation by time stepping. The idea here is that we have discretized the problem in such a way that we can update all of the internal E-Field values, so we do that first. After the internal E-field values are updated we send any values needed on the boundaries between processes and begin updating exterior boundaries using the DAB library. We send the DAB values as soon as they are available and then update the internal H-field while waiting to receive the E-field needed to update the boundaries. After receiving the E-field and DAB updates from neighboring processes, we update the H-fields along the boundaries. We repeat this process and calculate the error at the requesting intervals until we reach the final time.

// time step

if (grid_comm != MPI_COMM_NULL) {

for (tstep = 0; tstep < ntsteps; ++tstep) {

// generate output

if (tstep % tskip == 0) {

// calculate error

loc_err = calc_error();

glob_err = 0.0;

if (MPI_Reduce(&loc_err, &glob_err, 1, MPI_DOUBLE, MPI_SUM, 0, grid_comm) != MPI_SUCCESS)

std::cerr << "MPI_Reduce failed, err calculation" << std::endl;

if (my_id==0) {

std::cout << "tstep = " << tstep << " T (E) = " << Etime

<< " err = " << std::sqrt(glob_err)

<< " rel err = " << std::sqrt(glob_err)/glob_norm << std::endl;

err.push_back(std::sqrt(glob_err));

time.push_back(Etime);

}

} // output

// update E fields

step_E();

// Send the E fields

send_E();

// update the DAB

step_DAB();

// update the current E time

Etime += dt;

// Send the DAB values

send_DAB();

// update the H fields

step_inner_H();

// wait for the E field sends to complete

recv_E();

// wait for the DAB sends to complete

recv_DAB();

// get the updated boundary values from the DAB updaters

copy_DAB();

// update the boundary H fields

step_outer_H();

// increment H time

Htime += dt;

MPI_Barrier(grid_comm);

} // end time stepping

Ater the time stepping is complete, we compute the error at the final time and output all of the error data again in a comma seperated list that is easily imported into other programs.

// calculate final error

loc_err = calc_error();

glob_err = 0.0;

if (MPI_Reduce(&loc_err, &glob_err, 1, MPI_DOUBLE, MPI_SUM, 0, grid_comm) != MPI_SUCCESS)

std::cerr << "MPI_Reduce failed, err calculation" << std::endl;

if (my_id == 0) {

err.push_back(std::sqrt(glob_err));

time.push_back(Etime);

std::cout << "tstep = " << tstep << " T (E) = " << Etime

<< " err = " << std::sqrt(glob_err)

<< " rel err = " << std::sqrt(glob_err)/glob_norm << std::endl;

std::cout << std::endl << std::endl;

// print out all the errors again that are easier to import

std::cout << "time, error, relative error," << std::endl;

for (unsigned i=0; i<err.size(); ++i)

std::cout << time[i] << ", " << err[i] << ", " << err[i]/glob_norm

<< std::endl;

Function to Free Communicators¶

To avoid memory leaks, we define a function to free the communicators. We note that it may be better practice to have the class call MPI_Init and put the following code along with a call to MPI_Finalize in the class destructor.

void yee_updater::free_comms()

{

MPI_Comm_free(&glob_comm);

MPI_Comm_free(&grid_comm);

}

Function to Print Approximate Memory Usage¶

The following function is potentially useful in analyzing the load balancing and reports a list of how much memory is used by each process.

void yee_updater::print_mem_use() const

{

if (grid_comm != MPI_COMM_NULL) {

double tot_mem_use, dab_buff, ebuff, fields;

// calculate the size of the field vectors in MB

fields = sizeof(double)*(E[0].capacity() + E[1].capacity() + E[2].capacity() +

H[0].capacity() + H[1].capacity() + H[2].capacity()) / ((double) 1024*1024);

// calculate the size of the E send/recieve buffers

ebuff = 0;

for (int i=0; i<6; ++i)

ebuff += sizeof(double)*(E_sbuf[i].capacity() + E_rbuf[i].capacity()) \

/ ((double) 1024*1024);

// calculate the size of the DAB buffers

dab_buff = 0;

for (int i=0; i<6; ++i)

dab_buff += sizeof(double)*(DAB_sbuf[i].capacity() + DAB_rbuf[i].capacity()) \

/ ((double) 1024*1024);

// calculate total mem use (dab_mem_use is calculate in init_DAB())

tot_mem_use = dab_buff + ebuff + fields + dab_mem_use;

// colect everything on 1 process

std::vector<double> send, recv;

send.push_back(dab_buff);

send.push_back(ebuff);

send.push_back(fields);

send.push_back(dab_mem_use);

send.push_back(tot_mem_use);

recv.assign(nprocs_cubed*5, 0.0);

if (MPI_Gather(send.data(), 5, MPI_DOUBLE, recv.data(), 5, MPI_DOUBLE, 0, grid_comm) != MPI_SUCCESS)

std::cerr << "MPI_Gather failed " << std::endl;

if (my_id == 0) {

std::cout << " , DAB Buffers, E Buffers, Fields, DAB, Total" << std::endl;

for (int l=0; l<nprocs_cubed; ++l) {

std::cout << "proc " << l << ", ";

for (int i=0; i<5; ++i)

std::cout << recv[5*l + i] << ", ";

std::cout << std::endl;

}

std::cout << "Total, ";

for (int i=0; i<5; ++i) {

double tot = 0;

for (int l=0; l<nprocs_cubed; ++l)

tot += recv[5*l + i];

std::cout << tot << ", ";

}

std::cout << std::endl;

} // end my_id == 0

} // end if grid_comm != MPI_COMM_NULL

} // end print_mem_use()

Function to Print Timing Data¶

This function prints a list of time each process spends in certain sections of the code both in seconds and as a percentage. The first table is the actual times and the second table is the percentage of time spend in each section. Note the row totals can be viewed as wall times and the column totals are CPU times. Again, this function is potentially useful in analyzing the load balancing.

void yee_updater::print_timing_data() const

{

if (grid_comm != MPI_COMM_NULL) {

std::vector<double> timers_send, timers_recv;

timers_send.reserve(15);

timers_recv.assign(15*nprocs_cubed, 0.0);

// save all the local timers to a vector

timers_send.push_back(calc_params_t);

timers_send.push_back(create_comm_t);

timers_send.push_back(alloc_mem_t);

timers_send.push_back(init_dab_t);

timers_send.push_back(step_E_t);

timers_send.push_back(step_inner_H_t);

timers_send.push_back(step_outer_H_t);

timers_send.push_back(step_DAB_t);

timers_send.push_back(send_DAB_t);

timers_send.push_back(recv_DAB_t);

timers_send.push_back(send_E_t);

timers_send.push_back(recv_E_t);

timers_send.push_back(load_init_conds_t);

timers_send.push_back(calc_norm_t);

timers_send.push_back(calc_err_t);

// gather all timer data

MPI_Gather(timers_send.data(), timers_send.size(), MPI_DOUBLE, timers_recv.data(), timers_send.size(), MPI_DOUBLE, 0, grid_comm);

// print out timer data

if (my_id == 0) {

std::cout << std::endl << std::endl;

std::cout << " ,"

<< " Calculating Parameters,"

<< " Creating Communicators,"

<< " Allocating Memory,"

<< " Initializing DABs,"

<< " Stepping E,"

<< " Stepping Inner H,"

<< " Stepping Outer H,"

<< " Stepping DAB,"

<< " Sending DAB,"

<< " Receiving DAB,"

<< " Sending E,"

<< " Receiving E,"

<< " Loading Initial Condition,"

<< " Calculating Norm,"

<< " Calculating Error,"

<< " Total "

<< std::endl;

timers_send.assign(16, 0.0);

for (int i = 0; i<nprocs_cubed; ++i) {

double sum = std::accumulate(timers_recv.begin() + 15*i, timers_recv.begin() + 15*(i+1), 0.0);

// print timer data for each process

std::cout << " process " << i << ",";

for (int j=0; j<15; ++j)

std::cout << timers_recv[15*i+j] << ",";

std::cout << sum << std::endl;

// update total times

for (int j=0; j<15; ++j)

timers_send[j] += timers_recv[15*i + j];

timers_send[15] += sum;

}

std::cout << " Total " << ",";

for (int j=0; j<15; ++j)

std::cout << timers_send[j] << ",";

std::cout << timers_send[15] << std::endl << std::endl;

// compute percentages

timers_send.assign(16, 0.0);

for (int i = 0; i<nprocs_cubed; ++i) {

std::cout << " process " << i << ",";

for (int j=0; j<15; ++j)

std::cout << 100*timers_recv[15*i + j] / std::accumulate(timers_recv.begin() + 15*i, timers_recv.begin() + 15*i + 15, 0.0) << ",";

std::cout << "100" << std::endl;

// total percentages

for (int j=0; j<15; ++j)

timers_send[j] += timers_recv[15*i+j] / std::accumulate(timers_recv.begin() + 15*i, timers_recv.begin() + 15*i + 15, 0.0);

timers_send[15] += 1;

}

std::cout << " Average " << ",";

for (int j=0; j<15; ++j)

std::cout << 100*timers_send[j]/nprocs_cubed << ",";

std::cout << std::endl << std::endl;

} // end if id == 0

} // end comm check

} // end print_timing_data

Routine to Calculate Parameters¶

The main purpose of this routine is to do some basic load balancing. The idea that using P auxilliary variables is roughly equal (in terms of FLOPS) to doing ~3*P Yee cell updates, so we will make the processes with DAB layers have fewer Yee updates to compute but otherwise distribute the points as evenly as possible. Note that the difference between the DAB and Yee memory access patterns probably plays a role here, but we’re ignoring it.

Also note that we are overlaping the processor domains by a factor of h. This is certainly less memory efficient, but it makes the message passing and updates a bit more straightforward.

We begin by defining the bottom left corner of the domain, which we assume to be a cube centered around 0 and compute the grid spacing.

void yee_updater::calc_params()

{

int x, i, j, k, rem, n[3], P;

double t1, t2;

t1 = MPI_Wtime(); // start timer

// use the requested number of recursoins

P = CRBC_P;

// compute the left-most point in each coordinate direction

coord[0] = -domain_width/2.0;

coord[1] = -domain_width/2.0;

coord[2] = -domain_width/2.0;

h = domain_width/((double) (n_global - 1.0));

Next, if we only have a single process we just compute the number of grid points on that process by setting them to the number of points requested.

if (nprocs == 1) {

// if there's only 1 MPI process, we just compute the number of grid points

// like normal

for (i=0; i<3; ++i)

n[i] = n_global;

Otherwise, we start by calculating the total number of “grid points” assuming that each additional auxilliary variable in the DAB counts for \(dab\_wt\) grid points. We additionally assume that each of the exterior boundaries is in fact a DAB, that is we assume the free space problem. We also assume the grid overlaps by a factor of h so processes share 2 grid points with their neighbors. That is, we want the domain to look something like

...... ........... ........... ......

........... ........... ...........

So if we had a single process we would have n_global points plus there are 2 DABS, which give \(2 \cdot P \cdot dab\_wt\) points plus we have the extra points due to overlapping the grid, which gives \(2(nprocs-1)\) points putting this all together, we get that we need a total of \(n\_global + 2 \cdot P \cdot dab\_wt + 2 \cdot nprocs -2\). We try to divide this up evenly and calculate how many points are left over.

} else {

//

// IMPORTANT:

// This can fail by making processes have too few points on the boundary or

// or even assigning a negative number of points on the boundary processes.

// This isn't really accounted for, but we attempt to abort if we see it ...

x = ((int) (n_global + 2*dab_wt*P + 2*(nprocs) - 2)) / nprocs;

rem = ((int) (n_global + 2*dab_wt*P + 2*(nprocs) - 2)) % nprocs;

// Next we allocate points to processes in each direction bases on whether

// they are on the boundary or not

for (i=0; i<3; ++i) {

if ((cart_rank[i] == 0) || (cart_rank[i] == nprocs-1)) {

// if the process is on the boundary, we subtract of dab_wt*P points

// to account for the DAB layer. We additionally calculate the left

// most coordinate in this direction. Note that on the left side, we

// have already correctly set this value so we only do it if it is the

// right-most process

n[i] = x - dab_wt*P;

if (cart_rank[i] == nprocs-1) {

coord[i] += (x - dab_wt*P - 2)*h + (cart_rank[i]-1)*(x-2)*h;

}

} else {

// otherwise, we just assign the number of points as is and calculate

// the left-most point of the process in the current direction

n[i] = x;

coord[i] += (x - dab_wt*P - 2)*h + (cart_rank[i]-1)*(x-2)*h;

}

}

It is possible that the number of “points” generated above does not divide evenly across the processes. In this case, we just loop over the interior processes and add one point to the current process and adjust the physical coordinates of each process until we have used all of the extra points.

// now account for any left over points

if (nprocs == 2) {

// if there are only 2 processes per direction, just add the extra point(s)

// to the left process and shift the right process' coordinates accordingly.

for (i=0; i<3; ++i)

if (cart_rank[i] == 0) {

n[i] += rem;

} else {

coord[i] += rem*h;

}

} else {

// otherwise we only add extra points to the interior processes. We do

// this by looping over the interior processes from left to right and

// add one to the current process and the coordinates by h for all of the

// processes to the right and repeat until we have no remaining points.

int r[3];

r[0] = rem;

r[1] = rem;

r[2] = rem;

// loop over the number of remaining points just to make sure we iterate

// enough times

for (k=0; k<rem; ++k) {

// loop over the interior processes

for (j=1; j<nprocs-1; ++j) {

// loop over the directions

for (i=0; i<3; ++i) {

// if we have points left, add one to the current process and shift

// the process coordinates for the processes to the right

if (r[i] > 0) {

if (cart_rank[i] == j) {

n[i]++;

}

if (cart_rank[i] > j)

coord[i] += h;

}

r[i]--;

}

}

} // end for k

}

}

Finally, we do a simple check to make sure that the grid partitioning is somewhat reasonable in the sense that each process will have at least 1 internal point. We also calculate the time step size and set the initial times.

// do a basic check to make sure that the grid partitioning is somewhat

// reasonable

if ((n[0] < 3) || (n[1] < 3) || (n[2] < 3)) {

std::cerr << "Grid partitioning failed. Try increasing n, decrreasing dab_wt, and/or nprocs" << std::endl;

MPI_Abort(glob_comm, -3);

}

// save the number of grid points in each direction

nx = n[0];

ny = n[1];

nz = n[2];

// calculate the time step size and number of time steps

dt = 0.99 / sqrt(3.0/(h*h));

ntsteps = T / dt;

Etime = 0;

Htime = dt/2.0;

// update timer

t2 = MPI_Wtime();

calc_params_t += t2-t1;

Function to Create a MPI Communicator¶

This function creates a topologically aware MPI communicator and figures out the neighbors and boundary conditions on each process. We start by creating the a Cartesian communicator using the build in MPI function.

void yee_updater::create_mpi_comm(MPI_Comm comm) {

int periods[3], i, j, diag_coords[3], diag_rank;

periods[0] = 0; // not periodic

periods[1] = 0;

periods[2] = 0;

int reorder = 1;

double t1, t2;

t1 = MPI_Wtime(); // start timer

int dims[3];

dims[0] = nprocs;

dims[1] = nprocs;

dims[2] = nprocs;

nprocs_cubed = nprocs*nprocs*nprocs;

// create a cartesian communicator with nprocs in each direction

if (MPI_Cart_create(comm, 3, dims, periods, reorder, &grid_comm) != MPI_SUCCESS) {

std::cerr << "MPI_Cart_create failed" << std::endl;

}

Next we figure out the the neighboring processes in each direction using the MPI_Cart_shift function.

// figure out neighboring processes

for (int i=0; i<6; ++i)

MPI_DIR[i] = MPI_PROC_NULL;

cart_rank[0] = -1;

cart_rank[1] = -1;

cart_rank[2] = -1;

if (grid_comm != MPI_COMM_NULL) {

if (MPI_Comm_rank(grid_comm, &my_id) != MPI_SUCCESS)

std::cerr << "MPI_Comm_rank failed" << std::endl;

if (MPI_Cart_coords(grid_comm, my_id, 3, cart_rank) != MPI_SUCCESS)

std::cerr << "MPI_Cart_coords failed" << std::endl;

// figure the ids of the processes we might need to send data to

if (MPI_Cart_shift(grid_comm, 2, -1, &MPI_DIR[5], &MPI_DIR[4]) != MPI_SUCCESS)

std::cerr << "MPI_Cart_shift failed" << std::endl;

if (MPI_Cart_shift(grid_comm, 1, -1, &MPI_DIR[3], &MPI_DIR[2]) != MPI_SUCCESS)

std::cerr << "MPI_Cart_shift failed" << std::endl;

if (MPI_Cart_shift(grid_comm, 0, -1, &MPI_DIR[1], &MPI_DIR[0]) != MPI_SUCCESS)

std::cerr << "MPI_Cart_shift failed" << std::endl;

}

Then, we figure out which of the processes are on the boundary. We do this based

on whether or not the process has a neighbor, but it could also be done using the

coordinates of the processes on the Cartesian communicator. We are assuming the

free space problem, so in each direction we label the boundary to by type CRBC

for exterior boundaries and NONE for interior boundaries shared with another

process.

// figure out which processes are on the boundary

isBoundaryProc = false;

// if a process doesn't have a neighbor on at least 1 side, its on the boundary

if ((grid_comm != MPI_COMM_NULL) && ((MPI_DIR[0] == MPI_PROC_NULL) ||

(MPI_DIR[1] == MPI_PROC_NULL) || (MPI_DIR[2] == MPI_PROC_NULL) ||

(MPI_DIR[3] == MPI_PROC_NULL) || (MPI_DIR[4] == MPI_PROC_NULL) ||

(MPI_DIR[5] == MPI_PROC_NULL)))

isBoundaryProc = true;

// label the boundaries. Use type NONE for interior sides and CRBC for the

// exterior boundaries. To do a wave guide, e.g., one might change the type

// to DIR on the appropriate sides

for (int i=0; i<6; ++i) {

procBounds[i] = crbc::BoundaryProperties::NONE;

if ((grid_comm != MPI_COMM_NULL) && (MPI_DIR[i] == MPI_PROC_NULL))

procBounds[i] = crbc::BoundaryProperties::CRBC;

}

Finalling, we figure out which edges are shared between processes so we can send data diagonally to complete the edge updates.

// figure out if we need to send any edge data diagonally

if (grid_comm != MPI_COMM_NULL) {

// loop over sides

for (i=0; i<5; i++) {

// get a second side to check

for (j=i+1; j<6; j++) {

// make sure the sides are not parallel

if (j/2 != i/2) {

if ((MPI_DIR[i] != MPI_PROC_NULL) && (MPI_DIR[j] != MPI_PROC_NULL)) {

send_edges.push_back({i, j});

// get rank of destination

for (int l=0; l<3; ++l)

diag_coords[l] = cart_rank[l];

// shift coordinate for first side

diag_coords[i/2] = (i%2 == 0) ? cart_rank[i/2]-1 : cart_rank[i/2]+1;

// shift coordinate for second side

diag_coords[j/2] = (j%2 == 0) ? cart_rank[j/2]-1 : cart_rank[j/2]+1;

if (MPI_Cart_rank(grid_comm, diag_coords, &diag_rank) != MPI_SUCCESS)

std::cerr << "MPI_Cart_rank failed" << std::endl;

MPI_EDGE_DIR.push_back(diag_rank);

}

}

}

}

}

// stop timer

t2 = MPI_Wtime();

create_comm_t += t2-t1;

Function to Initialize Solutions¶

Here, we define a function which initializes the solution routines. We simply provide a source location, which we place at (0,0,0) and we give it the grid spacing, material parameters, \(\mu\) and \(\varepsilon\), and the parameters that determine the width and start time of the Gaussian pulse, \(\gamma\) and :math`tau`.

void yee_updater::init_solutions()

{

double src_loc[3];

double hloc[3];

// place the source at (0,0,0)a small perturbation to decrease the

// chances of coinciding with a grid point may be needed. If the source is on a grid

// point there is the possiblity of a division by zero in the solution

// routines

src_loc[0] = 0.0;

src_loc[1] = 0.0;

src_loc[2] = 0.0;

// set the grid spacing to be the same in all directions

hloc[0] = h;

hloc[1] = h;

hloc[2] = h;

// initialize the solution object

sol_obj = maxwell_solutions::MW_FreeSpace(gamma, tau, eps, mu, src_loc);

sol_obj.set_grid_spacing(hloc);

}

Function to Allocate Memory¶

This function allocates memory for each of the field components. We note it would likely be a good idea to allocate the send and receive buffers here as well.

void yee_updater::allocate_memory()

{

int m;

double t1, t2;

// start timer

t1 = MPI_Wtime();

// figure out the largest number number of grid points possible

m = (nx > ny) ? nx : ny;

m = (m > nz) ? m : nz;

if (MPI_Allreduce(&m, &maxn, 1, MPI_INT, MPI_MAX, grid_comm) != MPI_SUCCESS)

std::cerr << "MPI_Allreduce failed";

// allocate Fields and initialize to 0

E[0].assign((nx-1)*ny*nz, 0.0); // Ex

E[1].assign(nx*(ny-1)*nz, 0.0); // Ey

E[2].assign(nx*ny*(nz-1), 0.0); // Ez

H[0].assign(nx*(ny-1)*(nz-1), 0.0); // Hx

H[1].assign((nx-1)*ny*(nz-1), 0.0); // Hy

H[2].assign((nx-1)*(ny-1)*nz, 0.0); // Hz

// compute update coefficients

Hcoef = (dt/mu)/h;

Ecoef = (dt/eps)/h;

// stop timer

t2 = MPI_Wtime();

alloc_mem_t += t2-t1;

}

Function to Initilize the DAB Layer¶

The purpose of this function is to set up objects to provide the exterior boudnary updates using the DAB library. Again, we are assuming this is a free space problem, so we initialize DAB updaters for each of the 3 E-field components. Note, in a wave guide, for example, where there are no DAB edges or corners, we only need updaters for the tangential components. There’s no harm in having an updater for the normal components when it’s not needed, but it represents unnecessary work.

We overlap the DAB domains so that each process can update the information it needs to continue with the DAB library. If we did not overlap the domains, we would need to pass data used in the DAB library between neighboring processes at the wave equation stage of the DAB update. This is possible to do, but it would require changing the inner workings of the DAB library.

We start by creating storage for various properties of the DAB that we may want to print out such as the error estimates.

void yee_updater::init_DAB()

{

double delta;

int l,m;

int low[3], high[3];

double htmp[3];

htmp[0] = htmp[1] = htmp[2] = h;

double t1, t2;

// start timer

t1 = MPI_Wtime();

// storage for DAB boundary properties that we might want to print out or need

// to use elsewhere

if (my_id == 0) {

rDAB_props.assign(15*nprocs_cubed, 0.0);

rDAB_refl.assign(10*nprocs_cubed, 0.0);

}

DAB_props.assign(15, 0.0);

DAB_refl.assign(10, 0.0);

DAB_refl[6] = coord[0];

DAB_refl[7] = coord[1];

DAB_refl[8] = coord[2];

Next we check to see of the process is on the boundary. If it is, we initialize a boundary updater object for each of the 3 E-field components. Here, we are thinking of Ex = 0, Ey = 1, and Ez = 2. We provide the CRBC time parameter, the grid spacing, time step size, wave speed, and the processor boundary types.

if (isBoundaryProc) {

// We initialize the updaters in 3D with double field values and ints for

// indexing (and by default doubles for coeficients) and provide the run

// time, grid spacing, time step size, wave speed, and boundary configuration

// on each boundary process

bound_upd[0] = crbc::CrbcUpdates<3, double, int> (CRBC_T, htmp, dt, c, procBounds);

bound_upd[1] = crbc::CrbcUpdates<3, double, int> (CRBC_T, htmp, dt, c, procBounds);

bound_upd[2] = crbc::CrbcUpdates<3, double, int> (CRBC_T, htmp, dt, c, procBounds);

Then, we loop over and set up each of the possible boundary sides.

We are dealing with the message passing by overlapping the DAB layer for “simplicity.” We recall that we over lapped the Yee grid so we an overlap of 2 grid points for tangential components, so the grid looks like

Tangential components Normal Components

-------- ---x-----x-----x---

x x x | | |

-------- ---x-----x-----x---

-------- ---x-----x-----x---

x x x | | |

-------- ---x-----x-----x---

Note that the use of normal and tangential components here is somewhat confusing because it is in reference to the boundaries with neighboring processes, NOT the phyiscal boundary. We consider the direction in which the message passing needs to take place as the normal direction. This results in the following, e.g.

With our implementation, each process has components with the following indexing bounds:

Ex(0:nx-2, 0:ny-1, 0:nz-1) located at ((i+1/2)*h, j*h, k*h)

Ey(0:nx-1, 0:ny-2, 0:nz-1) located at (i*h, (j+1/2)*h, k*h)

Ez(0:nx-1, 0:ny-1, 0:nz-2) located at (i*h, j*h, (k+1/2)*h)

So, for example, we’ll consider the right boundary face in the x direction. Then, we potentially need to pass information North/South in the y-direction) or Up/Down (in the z-direction). For the case of needed to pass information in the North/South direction, the Ey component is normal to the interface between the two processes and Ex and Ez are tangential. The tangential components are already overlapped the way we want because we overlapped the grids for the Yee scheme, therefore, we tell the DAB updater the actual data extents for the points:

For Ex the proper extents are [nx-3, nx-2] x [0, ny-1] x [0, nz-1] because we include all the points in the y and z directions and the point in x on the physical boundary and it’s neighbor to the left. Similary, for Ez the extents are [nx-2, nx-1] x [0, ny-1] x [0, nz-2].

For Ey, if we do the same thing, we would get the extents nx-2, nx-1] x [0, ny-2] x [0, nz-1], but this does not overlap the grids by 2 points. To correct this, we tell the DAB layer that the extents are greater than the actual data range by subtracting 1 from the lower y extent if their is a neighboring process in the MPI_DIR[4] direction to get [nx-2, nx-1] x [-1, ny-2] x [0, nz-1].

NOTE: the DAB updater considers the extents to be inclusive.

for (l=0; l<6; ++l) {

if (procBounds[l] == crbc::BoundaryProperties::CRBC) {

// figure out seperation from source

delta = domain_width / 2.0;

// loop over field components

for (m=0; m<3; ++m) {

// generic extents that are close to correct (some of the indices are

// off by a factor of 1, which depends on the component)

low[0] = 0;

low[1] = 0;

low[2] = 0;

high[0] = nx - 1;

high[1] = ny - 1;

high[2] = nz - 1;

if (l == 0) {

// left boundary in x, need [0,1] in x, all in y, z

high[0] = 1;

// adjust based on field component

if (m == 1) { // Ey

high[1]--;

if (MPI_DIR[2] != MPI_PROC_NULL)

low[1]--;

} else if (m == 2) { // Ez

high[2]--;

if (MPI_DIR[4] != MPI_PROC_NULL)

low[2]--;

}

} else if (l == 1) {

// right boundary in x, need [nx-2, nx-1] in x, all y, z

low[0] = nx-2;

// adjust based on field component

if (m == 0) {

high[0]--;

low[0]--;

} else if (m == 1) { // Ey

high[1]--;

if (MPI_DIR[2] != MPI_PROC_NULL)

low[1]--;

} else if (m == 2) { // Ez

high[2]--;

if (MPI_DIR[4] != MPI_PROC_NULL)

low[2]--;

}

} else if (l == 2) {

// left boundary in y, need [0,1] in y, all in x, z

high[1] = 1;

// adjust based on field component

if (m == 0) { // Ex

high[0]--;

if (MPI_DIR[0] != MPI_PROC_NULL)

low[0]--;

} else if (m == 2) { // Ez

high[2]--;

if (MPI_DIR[4] != MPI_PROC_NULL)

low[2]--;

}

} else if (l == 3) {

// right boundary in y, need [ny-2, ny-1] in y, all x, z

low[1] = ny-2;

// adjust based on field component

if (m == 1) {

high[1]--;

low[1]--;

} else if (m == 0) { // Ex

high[0]--;

if (MPI_DIR[0] != MPI_PROC_NULL)

low[0]--;

} else if (m == 2) { // Ez

high[2]--;

if (MPI_DIR[4] != MPI_PROC_NULL)

low[2]--;

}

} else if (l == 4) {

// left boundary in z, need [0,1] in z, all in x, y

high[2] = 1;

// adjust based on field component

if (m == 0) { // Ex

high[0]--;

if (MPI_DIR[0] != MPI_PROC_NULL)

low[0]--;

} else if (m == 1) { // Ey

high[1]--;

if (MPI_DIR[2] != MPI_PROC_NULL)

low[1]--;

}

} else if (l == 5) {

// right boundary in z, need [nz-2, nz-1] in z, all x, y

low[2] = nz-2;

// adjust based on field component

if (m == 2) {

high[2]--;

low[2]--;

} else if (m == 0) { // Ex

high[0]--;

if (MPI_DIR[0] != MPI_PROC_NULL)

low[0]--;

} else if (m == 1) { // Ey

high[1]--;

if (MPI_DIR[2] != MPI_PROC_NULL)

low[1]--;

}

}

// call initializer and limit the number of recursions to at most 20

// bound_upd[m].init_face(l, low, high, delta, 20, tol);

bound_upd[m].init_face(l, low, high, delta, CRBC_P);

} // end loop over components

}

} // end loop over sides

Now we get and save properties such as estimated memory use, number of faces, edges, and corners, and the error estimates from the DAB updater objects.

// now get some properties from the updaters that we may be interested in

// at a later time

dab_mem_use = bound_upd[0].get_mem_usage() + bound_upd[1].get_mem_usage() \

+ bound_upd[2].get_mem_usage();

// get number of recursions and reflection coefficients

for (l = 0; l<6; ++l) {

if (procBounds[l] == crbc::BoundaryProperties::CRBC) {

DAB_props.at(l) = bound_upd[0].get_num_recursions(l);

DAB_refl.at(l) = bound_upd[0].get_reflection_coef(l);

}

}

DAB_refl[9] = dab_mem_use;

// get info about the domain configuration

DAB_props.at(6) = bound_upd[0].get_num_faces();

DAB_props.at(7) = bound_upd[0].get_num_edges();

DAB_props.at(8) = bound_upd[0].get_num_corners();

DAB_props.at(9) = bound_upd[1].get_num_faces();

DAB_props.at(10) = bound_upd[1].get_num_edges();

DAB_props.at(11) = bound_upd[1].get_num_corners();

DAB_props.at(12) = bound_upd[2].get_num_faces();

DAB_props.at(13) = bound_upd[2].get_num_edges();

DAB_props.at(14) = bound_upd[2].get_num_corners();

Finally, we call a function that figures out where we need to do message passing to complete the DAB updates.

// figure out the message passing configuration

calc_DAB_send_params();

// stop timer

t2 = MPI_Wtime();

init_dab_t += t2-t1;

Function to Update E-Fields¶

This function updates the E-field values and we note that it is identical to the serial case with the exception that we do not update any points on the boundary. This corresponds to doing the updates for a homogeneous Dirichlet boundary in the serial case, but here the boundary values are calculated on a neighboring process because we overlapped the domains or by the DAB boundary updater. We have included option OpenMP here.

void yee_updater::step_E()

{

int i,j,k;

int nxm, nym, nzm;

nxm = nx-1;

nym = ny-1;

nzm = nz-1;

double t1, t2;

// start timer

t1 = MPI_Wtime();

#if USE_OPENMP

#pragma omp parallel default(shared) private(i,j,k)

{

#endif

// compute updates to Ex

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=1; k < nzm; ++k) {

for (j=1; j < nym; ++j) {

for (i=0; i < nxm; ++i) {

E[0][i + (j + k*ny)*nxm] += Ecoef * ((H[2][i + (j + k*nym)*nxm] - H[2][i + (j-1 + k*nym)*nxm]) \

- (H[1][i + (j + k*ny)*nxm] - H[1][i + (j + (k-1)*ny)*nxm]));

}

}

}

// compute updates to Ey

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=1; k < nzm; ++k) {

for (j=0; j < nym; ++j) {

for (i=1; i < nxm; ++i) {

E[1][i + (j + k*nym)*nx] += Ecoef * ((H[0][i + (j + k*nym)*nx] - H[0][i + (j + (k-1)*nym)*nx]) \

- (H[2][i + (j + k*nym)*nxm] - H[2][i-1 + (j + k*nym)*nxm]));

}

}

}

// compute updates to Ez

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=0; k < nzm; ++k) {

for (j=1; j < nym; ++j) {

for (i=1; i < nxm; ++i) {

E[2][i + (j + k*ny)*nx] += Ecoef * ((H[1][i + (j + k*ny)*nxm] - H[1][i-1 + (j + k*ny)*nxm]) \

- (H[0][i + (j + k*nym)*nx] - H[0][i + (j-1 + k*nym)*nx]));

}

}

}

#if USE_OPENMP

} // end parallel region

#endif

// stop timer

t2 = MPI_Wtime();

step_E_t += t2-t1;

}

Function to Compute Interior H-Field Updates¶

Again, this function is identical to the serial case except we do not update the H-fields on the boundaries because they depend on E-field values on the neighboring process.

void yee_updater::step_inner_H()

{

int i,j,k;

int nxm, nym, nzm;

nxm = nx-1;

nym = ny-1;

nzm = nz-1;

double t1, t2;

// start timer

t1 = MPI_Wtime();

#if USE_OPENMP

#pragma omp parallel default(shared) private(i,j,k)

{

#endif

// compute updates to Hx

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=1; k < nzm-1; ++k) {

for (j=1; j < nym-1; ++j) {

for (i=1; i < nxm; ++i) {

H[0][i + (j + k*nym)*nx] += Hcoef * ((E[1][i + (j + (k+1)*nym)*nx] - E[1][i + (j + k*nym)*nx]) \

- (E[2][i + (j+1 + k*ny)*nx] - E[2][i + (j + k*ny)*nx]));

}

}

}

// compute updates to Hy

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=1; k < nzm-1; ++k) {

for (j=1; j < nym; ++j) {

for (i=1; i < nxm-1; ++i) {

H[1][i + (j + k*ny)*nxm] += Hcoef * ((E[2][i+1 + (j + k*ny)*nx] - E[2][i + (j + k*ny)*nx]) \

- (E[0][i + (j + (k+1)*ny)*nxm] - E[0][i + (j + k*ny)*nxm]));

}

}

}

// compute updates to Hz

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=1; k < nzm; ++k) {

for (j=1; j < nym-1; ++j) {

for (i=1; i < nxm-1; ++i) {

H[2][i + (j + k*nym)*nxm] += Hcoef * ((E[0][i + (j+1 + k*ny)*nxm] - E[0][i + (j + k*ny)*nxm]) \

- (E[1][i+1 + (j + k*nym)*nx] - E[1][i + (j + k*nym)*nx]));

}

}

}

#if USE_OPENMP

}

#endif

// stop timer

t2 = MPI_Wtime();

step_inner_H_t += t2-t1;

Routine to Update Boundary H-Fields¶

After we have passed the E-fields from the neighboring processes, this function computes the remaining H-field updates on the boundaries.

void yee_updater::step_outer_H()

{

int i,j,k;

int nxm, nym, nzm;

nxm = nx-1;

nym = ny-1;

nzm = nz-1;

double t1, t2;

// start timer

t1 = MPI_Wtime();

#if USE_OPENMP

#pragma omp parallel default(shared) private(i,j,k)

{

#endif

// compute updates to Hx

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=0; k < nzm; ++k) {

for (j=0; j < nym; ++j) {

for (i=0; i < nx; i+=nxm) {

H[0][i + (j + k*nym)*nx] += Hcoef * ((E[1][i + (j + (k+1)*nym)*nx] - E[1][i + (j + k*nym)*nx]) \

- (E[2][i + (j+1 + k*ny)*nx] - E[2][i + (j + k*ny)*nx]));

}

}

}

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=0; k < nzm; ++k) {

for (j=0; j < nym; j+=nym-1) {

for (i=1; i < nxm; ++i) {

H[0][i + (j + k*nym)*nx] += Hcoef * ((E[1][i + (j + (k+1)*nym)*nx] - E[1][i + (j + k*nym)*nx]) \

- (E[2][i + (j+1 + k*ny)*nx] - E[2][i + (j + k*ny)*nx]));

}

}

}

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=0; k < nzm; k+=nzm-1) {

for (j=1; j < nym-1; ++j) {

for (i=1; i < nxm; ++i) {

H[0][i + (j + k*nym)*nx] += Hcoef * ((E[1][i + (j + (k+1)*nym)*nx] - E[1][i + (j + k*nym)*nx]) \

- (E[2][i + (j+1 + k*ny)*nx] - E[2][i + (j + k*ny)*nx]));

}

}

}

// compute updates to Hy

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=0; k < nzm; ++k) {

for (j=0; j < ny; j+=nym) {

for (i=0; i < nxm; ++i) {

H[1][i + (j + k*ny)*nxm] += Hcoef * ((E[2][i+1 + (j + k*ny)*nx] - E[2][i + (j + k*ny)*nx]) \

- (E[0][i + (j + (k+1)*ny)*nxm] - E[0][i + (j + k*ny)*nxm]));

}

}

}

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=0; k < nzm; k+=nzm-1) {

for (j=1; j < nym; ++j) {

for (i=0; i < nxm; ++i) {

H[1][i + (j + k*ny)*nxm] += Hcoef * ((E[2][i+1 + (j + k*ny)*nx] - E[2][i + (j + k*ny)*nx]) \

- (E[0][i + (j + (k+1)*ny)*nxm] - E[0][i + (j + k*ny)*nxm]));

}

}

}

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=1; k < nzm-1; ++k) {

for (j=1; j < nym; ++j) {

for (i=0; i < nxm; i+=nxm-1) {

H[1][i + (j + k*ny)*nxm] += Hcoef * ((E[2][i+1 + (j + k*ny)*nx] - E[2][i + (j + k*ny)*nx]) \

- (E[0][i + (j + (k+1)*ny)*nxm] - E[0][i + (j + k*ny)*nxm]));

}

}

}

// compute updates to Hz

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=0; k < nz; k+=nzm) {

for (j=0; j < nym; ++j) {

for (i=0; i < nxm; ++i) {

H[2][i + (j + k*nym)*nxm] += Hcoef * ((E[0][i + (j+1 + k*ny)*nxm] - E[0][i + (j + k*ny)*nxm]) \

- (E[1][i+1 + (j + k*nym)*nx] - E[1][i + (j + k*nym)*nx]));

}

}

}

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=1; k < nzm; ++k) {

for (j=0; j < nym; j+=nym-1) {

for (i=0; i < nxm; ++i) {

H[2][i + (j + k*nym)*nxm] += Hcoef * ((E[0][i + (j+1 + k*ny)*nxm] - E[0][i + (j + k*ny)*nxm]) \

- (E[1][i+1 + (j + k*nym)*nx] - E[1][i + (j + k*nym)*nx]));

}

}

}

#if USE_OPENMP

#pragma omp for collapse(3)

#endif

for (k=1; k < nzm; ++k) {

for (j=1; j < nym-1; ++j) {

for (i=0; i < nxm; i+=nxm-1) {

H[2][i + (j + k*nym)*nxm] += Hcoef * ((E[0][i + (j+1 + k*ny)*nxm] - E[0][i + (j + k*ny)*nxm]) \

- (E[1][i+1 + (j + k*nym)*nx] - E[1][i + (j + k*nym)*nx]));

}

}

}

#if USE_OPENMP

}

#endif

// stop timer

t2 = MPI_Wtime();

step_outer_H_t += t2-t1;

}

Function to Update the DAB Values¶

This function uses the DAB library to compute updated values on the boundaries. This function does the same thing as the serial version on each process. In particular, we ask the boundary update for the data extents of the inputs it needs from the interior updates and then we copy those values into the DAB updater object. The only thing different from the serial case is that sometimes the DAB updater will request points that are not in the interior of the domain because we overlapped the process domains. To fix this, we simply skip any negative indexes.

void yee_updater::step_DAB()

{

int i, j, k, l, m;

int ind[3];

int nxm, nym;

int low_ind[3], high_ind[3];

double t1, t2;

// start timer

t1 = MPI_Wtime();

if (isBoundaryProc) {

// loop over the boundary faces

for (l=0; l<6; ++l) {

// check to see if the current face is of type CRBC

if (procBounds[l] == crbc::BoundaryProperties::CRBC) {

// loop over components

for (m=0; m<3; ++m) {

// get the indices the updater object expects as input from this face.

// Note that these values are inclusive

bound_upd[m].get_input_extents(l, low_ind, high_ind);

// Because we overlapped the grid the range may extend outside of the

// field arrays. To fix this, we simply change -1 -> 0 in the indexing

// if it occurs.

for (i=0; i<3; ++i)

low_ind[i] = (low_ind[i] == -1) ? 0 : low_ind[i];

// set extents for loops Ex has nx-1 in the x-direction, Ey has ny-1 in y-dir.

nxm = (m == 0) ? nx-1 : nx;

nym = (m == 1) ? ny-1 : ny;

// copy in the face values to the Ex faces

for (k=low_ind[2]; k<=high_ind[2]; ++k) {

for (j=low_ind[1]; j<=high_ind[1]; ++j) {

for (i=low_ind[0]; i<=high_ind[0]; ++i) {

ind[0] = i;

ind[1] = j;

ind[2] = k;

bound_upd[m].load_face_data(l, ind, E[m][i + (j + k*nym)*nxm]);

}

}

}

}

} // end if crbc

} // end for

// compute updates

bound_upd[0].compute_updates();

bound_upd[1].compute_updates();

bound_upd[2].compute_updates();

} // end isBoundaryProc

// stop timer

t2 = MPI_Wtime();

step_DAB_t += t2-t1;

}

Function to Copy Updated DAB Values¶

This function copies the updated values from the DAB updater to the interior of the domain. Again, this is essentially identical to the serial case. We loop over all of the updaters, ask them what the output extents are and skip any negative indices that are caused by overlapping the grids. We also skip the normal components because the values should already be correct from the Yee updates.

void yee_updater::copy_DAB()

{

int i, j, k, l, m;

int ind[3];

int nxm, nym;

int low_ind[3], high_ind[3];

double t1, t2;

// start timer

t1 = MPI_Wtime();

if (isBoundaryProc) {

// now copy the updated values from the DAB back into the fields. We only

// need to copy the tangential fields because the normal components should

// already be correct from the Yee updates.

// loop over the boundary faces

for (l=0; l<6; ++l) {

// check to see if the current face is of type CRBC

if (procBounds[l] == crbc::BoundaryProperties::CRBC) {

// loop over components

for (m=0; m<3; ++m) {

// skip normal component

if (l/2 == m)

continue;

// get the indices the updater object expects to output from this face.

// Note that these values are inclusive

bound_upd[m].get_output_extents(l, low_ind, high_ind);

// Because we overlapped the grid the range may extend outside of the

// field arrays. To fix this, we simply change -1 -> 0 in the indexing

// if it occurs.

for (i=0; i<3; ++i)

low_ind[i] = (low_ind[i] == -1) ? 0 : low_ind[i];

// set extents for loops

nxm = (m == 0) ? nx-1 : nx;

nym = (m == 1) ? ny-1 : ny;

// copy in the face values to the Ex faces

for (k=low_ind[2]; k<=high_ind[2]; ++k) {

for (j=low_ind[1]; j<=high_ind[1]; ++j) {

for (i=low_ind[0]; i<=high_ind[0]; ++i) {

ind[0] = i;

ind[1] = j;

ind[2] = k;

E[m][i + (j + k*nym)*nxm] = bound_upd[m].get_new_face_vals(l, ind);

}

}

}

}

} // end if crbc

} // end for

} // end isBoundaryProc

// stop timer

t2 = MPI_Wtime();

step_DAB_t += t2-t1;

}

Functions to Copy To and From DAB Updaters¶

The following functions are commonly used loops to copy data bewteen send and receive buffers and the DAB updaters. These differ from where we copy data to and from the solver and the DAB updater because these get data at all of the auxiliary recursion levels used in the DAB updaters.

There are two sets on loops in each function, one is for getting data from the auxiliary variables on a face and the other is for getting auxiliary data from the edge variables. The difference is that the face data has a single auxiliary index and the edge data is doubly indexed.

For simplicity, we just add the auxiliary variables to the vector by placing the data in the next available spot. We do this primarily to avoid having to do extra indexing that changes depending on the side and component. This means that we depend on these loops being executed in the same order, so it is not viable to use threading in this location.

The first function copies data from the updater object to the buffer

void yee_updater::get_dab_vals_loop(std::vector<double> &buffer,

crbc::CrbcUpdates<3, double, int> &updater,

const int &side,

const int *low,

const int *high,

const int *plow,

const int *phigh,

const bool &isedge)

{

/* DO NOT THREAD THESE LOOPS

We are depending on the order being the same

*/

int i,j,k,p,q, ind[3];

if (isedge) {

int pind[2];

for (p=plow[0]; p<=phigh[0]; ++p) {

pind[0] = p;

for (q=plow[1]; q<=phigh[1]; ++q) {

pind[1] = q;

for (k=low[2]; k<=high[2]; ++k) {

ind[2] = k;

for (j=low[1]; j<=high[1]; ++j) {

ind[1] = j;

for (i=low[0]; i<=high[0]; ++i) {

ind[0] = i;

buffer.push_back(updater.get_edge_auxiliary_vars(side, ind, pind));

} // i

} // j

} // k

} // q

} // p

} else {

for (p=plow[0]; p<=phigh[0]; ++p) {

for (k=low[2]; k<=high[2]; ++k) {

ind[2] = k;

for (j=low[1]; j<=high[1]; ++j) {

ind[1] = j;

for (i=low[0]; i<=high[0]; ++i) {

ind[0] = i;

buffer.push_back(updater.get_auxiliary_vars(side, ind, p));

} // i

} // j

} // k

} // p

}

}

The second function copies data from the buffer to the updater object

void yee_updater::set_dab_vals_loop(std::vector<double> &buffer,

crbc::CrbcUpdates<3, double, int> &updater,

int &count,

const int &side,

const int *low,

const int *high,

const int *plow,

const int *phigh,

const bool &isedge)

{

/* DO NOT THREAD THESE LOOPS

We are depending on the order being the same

*/

int i,j,k,p,q, ind[3];

if (isedge) {

int pind[2];

for (p=plow[0]; p<=phigh[0]; ++p) {

pind[0] = p;

for (q=plow[1]; q<=phigh[1]; ++q) {

pind[1] = q;

for (k=low[2]; k<=high[2]; ++k) {

ind[2] = k;

for (j=low[1]; j<=high[1]; ++j) {

ind[1] = j;

for (i=low[0]; i<=high[0]; ++i) {

ind[0] = i;

updater.set_edge_auxiliary_vars(side, ind, pind, buffer[count++]);

} // i

} // j

} // k

} // q

} // p

} else {

for (p=plow[0]; p<=phigh[0]; ++p) {

for (k=low[2]; k<=high[2]; ++k) {

ind[2] = k;

for (j=low[1]; j<=high[1]; ++j) {

ind[1] = j;

for (i=low[0]; i<=high[0]; ++i) {

ind[0] = i;

updater.set_auxiliary_vars(side, ind, p, buffer[count++]);

} // i

} // j

} // k

} // p

}

}

Function to Identify How to send DAB Auxiliary Variables¶

This function idientifies the directions and processor IDs that the current process needs to communicate DAB information.

Note this only checks the cases possible in this implementation. A more generic implementation would need to handle more cases. In particular, we can’t have 2 parallel faces that need to pass data from the same process since we are assuming each direction has the same number of processes and we do not need to pass any information if we only have 1 process.

We start by figuring out the possible directions we need to sent. We do this by

looping over all of the sides on the current process. If we find a side with the

boundary type of CRBC, we then need to check to see if the neighboring sides have

a boundary type NONE. We any sides that satisfy this check to a list.

void yee_updater::calc_DAB_send_params()

{

unsigned int l, m;

int tang_sides[4], diag_coords[3], diag_rank;

std::array<int, 2> corner_pairs;

// first identify the directions that we need to send

for (l=0; l<6; ++l) { // loop over sides

if (procBounds[l] == crbc::BoundaryProperties::CRBC) {

for (m=0; m<6; ++m) { // loop over sides

if (l/2 == m/2)

continue; // skip parallel sides

if (procBounds[m] == crbc::BoundaryProperties::NONE)

send_dirs.push_back(m);

}

}

}

Next, it is possible that our list of direction we need to send has duplicates. This can occur if there process is at the edge or corner of the domain. We simply remove any duplicates using Standard Template Library Functions that are available in C++11.

// remove any duplicates in send_dirs

std::sort(send_dirs.begin(), send_dirs.end());

auto last = std::unique(send_dirs.begin(), send_dirs.end());

send_dirs.erase(last, send_dirs.end());

After we have a list of all of the directoins that we need to send data in, we create a list of all of the adjacent sides. (It is possible to do this with clever indexing, but it is much clearer to just use a case statement).

// loop over the send directions

for (l=0; l<send_dirs.size(); ++l) {

// figure out which sides need to send in the current direction

// start by listing the tangential sides

switch (send_dirs[l] / 2) {

case 0: // outward normal is +/-x

tang_sides[0] = 2; // left y

tang_sides[1] = 3; // right y

tang_sides[2] = 4; // left z

tang_sides[3] = 5; // right z

break;

case 1: // outward normal is +/-y

tang_sides[0] = 0; // left x

tang_sides[1] = 1; // right x

tang_sides[2] = 4; // left z

tang_sides[3] = 5; // right z

break;

case 2: // outward normal is +/-z

tang_sides[0] = 0; // left x

tang_sides[1] = 1; // right x

tang_sides[2] = 2; // left y

tang_sides[3] = 3; // left z

break;

default: // shouldn't happen

for (m=0; m<4; ++m)

tang_sides[m] = -1;

std::cerr << "invalid side" << std::endl;

break;

}

For each of the sides tangent to the send directions that are a DAB/CRBC boundary, we save the side to a list. Then we convert the local side index to the MPI rank of the neighboring process in the appropriate direction.

// check to see if any of the tangetial sides are a DAB/CRBC boundary

for (m=0; m<4; ++m) {

if (procBounds[tang_sides[m]] == crbc::BoundaryProperties::CRBC) {

send_sides[l].push_back(tang_sides[m]);

}

}

}

// finally change the directions from local side indices to the MPI ranks in

// the appropriate direction

for (l=0; l<send_dirs.size(); ++l) {

send_mpi_dirs.push_back(MPI_DIR[send_dirs[l]]);

}

After we have dealt with the sides, we deal with any situations where we might need to send data diagonally. In particular, recall that we overlapped the grid by 2 points, so we have a data configuration that looks like

| |

o o o o Here, the process boundaries are represented

| | by -- or |. Grid points are represented by o, x

--x--o x--o--

--o--o o--o--

| |

x o x o

| |

Where the grid point x has the same physical coordinates but is located at

different positions on each of the four processes. We note that the DAB uses the

7-point wave stencil, so the DAB updater is not able to update any of the points

on the process boundaries. However, due to the overlap, we see that we can get

these values from a neighboring process.

We’re ordering the possible corners in a standard way because this makes it easier to keep track of things when we’re actually doing the message passing. The ordering we are using is

CRBC side = 0 or 1 (x normal), tang. sides 2, 4 (South and Down)

CRBC side = 0 or 1 (x normal), tang. sides 2, 5 (South and Up)

CRBC side = 0 or 1 (x normal), tang. sides 3, 4 (North and Down)

CRBC side = 0 or 1 (x normal), tang. sides 3, 5 (North and Up)

CRBC side = 2 or 3 (y normal), tang. sides 0, 4 (West and Down)

CRBC side = 2 or 3 (y normal), tang. sides 0, 5 (West and Up)

CRBC side = 2 or 3 (y normal), tang. sides 1, 4 (East and Down)

CRBC side = 2 or 3 (y normal), tang. sides 1, 5 (East and Up)

CRBC side = 4 or 5 (z normal), tang. sides 0, 2 (West and South)

CRBC side = 4 or 5 (z normal), tang. sides 0, 3 (West and North)

CRBC side = 4 or 5 (z normal), tang. sides 1, 2 (East and South)

CRBC side = 4 or 5 (z normal), tang. sides 1, 3 (East and North)

Again, note that this does not cover all the cases for a generic implementation because we can not, e.g., have 2 parallel CRBC sides on the same process in the current configuration.

The following code does essentially the same as above, but this time it checks

for 2 perpendicular faces with boudnary type CRBC that have a common neighbor

with the boundary type NONE. It then figures out the neighbor diagonal to this

edge and saves the MPI rank of that neighbor.

// Start by checking for perpindicular sends from the same side

for (l=0; l<6; ++l) { // loop over sides

if (procBounds[l] == crbc::BoundaryProperties::CRBC) {

switch (l / 2) {

case 0: // outward normal is +/-x

tang_sides[0] = 2; // left y

tang_sides[1] = 3; // right y

tang_sides[2] = 4; // left z

tang_sides[3] = 5; // right z

break;

case 1: // outward normal is +/-y

tang_sides[0] = 0; // left x

tang_sides[1] = 1; // right x

tang_sides[2] = 4; // left z

tang_sides[3] = 5; // right z

break;

case 2: // outward normal is +/-z

tang_sides[0] = 0; // left x

tang_sides[1] = 1; // right x

tang_sides[2] = 2; // left y

tang_sides[3] = 3; // left z

break;

default: // shouldn't happen

for (m=0; m<4; ++m)

tang_sides[m] = -1;

std::cerr << "invalid side" << std::endl;

break;

}

for (m=0; m<4; ++m) { // loop tangential sides

// this checks the pairs (0,2), (0,3), (1,2), (1,3) of tangential sides

if ((procBounds[tang_sides[m/2]] == crbc::BoundaryProperties::NONE) &&

(procBounds[tang_sides[2+(m%2)]] == crbc::BoundaryProperties::NONE)) {

corner_pairs = {tang_sides[m/2], tang_sides[2+(m%2)]};

send_corners[4*(l/2) + m] = {(int) l, tang_sides[m/2], tang_sides[2+(m%2)]};

// now figure out the mpi rank of the destination

for (int i=0; i<3; ++i)

diag_coords[i] = cart_rank[i];

// figure out the destination coords by adding +-1 to the coordinates

// of the current process in the appropriate dimensions

diag_coords[corner_pairs[0]/2] = (corner_pairs[0]%2 == 0) ? \

diag_coords[corner_pairs[0]/2]-1 : diag_coords[corner_pairs[0]/2]+1;

diag_coords[corner_pairs[1]/2] = (corner_pairs[1]%2 == 0) ? \

diag_coords[corner_pairs[1]/2]-1 : diag_coords[corner_pairs[1]/2]+1;

if (MPI_Cart_rank(grid_comm, diag_coords, &diag_rank) != MPI_SUCCESS)

std::cerr << "MPI_Cart_rank failed" << std::endl;

// save rank

corner_mpi_dirs[4*(l/2) + m].push_back(diag_rank);

}

}

}

}

Function to send DAB Values¶

This function is responsible for sending the DAB auxiliary variables to neighboring processes when needed. The most important thing to remember here is that the DAB layer is only 3-points wide in the normal direction to the boundary. The only updates that the DAB layer does that require message passing are 7-point wave equation stencil updates and these updates are only used on the middle layer of points in the DAB. Therefore, we only need to send the DAB values in this middle layer (these points correspond to the auxiliary variables that are located at the output location of the DAB).

The idea is on each process, we will loop over all of the directions we need to send data in.

void yee_updater::send_DAB()

{

unsigned int i,l,m, side, sidea, sideb;

int edge;

int low[3], high[3], plow[2], phigh[2];

double t1, t2;

// start timer

t1 = MPI_Wtime();

if (isBoundaryProc) {

// loop over the directions we need to send

for (l=0; l<send_dirs.size(); ++l) {

Next, we delete any data left over from the previous time step in the send and receive buffers for the current side. Then we loop over all of the boundary sides that need to send data in the current direction and also loop over all three boundary updater components.

side = send_dirs[l];

// clear the buffers for this direction

DAB_sbuf[side].clear();

DAB_rbuf[side].clear();

// loop over the sides we need to send in the current direction

for (m=0; m<send_sides[l].size(); ++m) {

// loop over the components

for (i=0; i<3; ++i) {

sidea = send_sides[l].at(m);

Then, we get the spatial indexing for the middle layer of auxiliary variables by asking the DAB updater objects for the output extents.

// get data extents for current component

// This gets us the indices for all the points on the plane

// parallel to the phyiscal boundary

bound_upd[i].get_output_extents(sidea, low, high);

The ouput extents covers the entire plane of points parallel to the DAB boundary, but

we only need to send the second to last line of points parallel to the neigboring process

where we are sending the data. We can get the component of the indices we need to

change using side / 2 (here 0 corresponds to sending in the x-direction, 1

corresponds to sending the y-direction, and 2 corresponds to sending in the z-direction).

Then we can determine if we are sending “right” or “left” by using side % 2.

We use this information to modify the data extents: if we send left, we add 1 to

the low extent to start at the 2nd line of points and set the high extent to this

new low extent so we stop after this line. Similarly, if we send right, we subtract

1 from the high extent to finish at the 2nd last line of points and set the low extent

to this new high extent so we also start at the 2nd to last line of points.

// now we need to restrict to second to last line of points parallel to

// the boundary we are sending. side / 2 gives us the component of the

// extents we need to modify and side % 2 tells us if we need to modify

// the low or high extents. Here, we also adjust for the overlap

// based on the component

if (side % 2 == 0) { // left side in the appropriate direction

high[side / 2] = (side / 2 == i) ? ++low[side / 2] : ++low[side / 2];

} else { // right side in the appropriate direction

low[side / 2] = (side / 2 == i) ? --high[side / 2] : --high[side / 2];

}

Finally, we copy the DAB auxiliary variables into the send buffer

// copy data auxilliary data into the send buffer

plow[0] = 0; // auxilliary index bounds

phigh[0] = bound_upd[i].get_num_recursions(sidea);

get_dab_vals_loop(DAB_sbuf[side], bound_upd[i], sidea, low, high, plow, phigh);

} // loop over components

} // end loop over sides in the current direction

Next we do the exact same thing for any edges that we need to send. The only difference here is the boundary updater now returns a line of points when we ask for the output extents and we need to restrict this list to the 2nd to last point on the line. We do this in the same way that we restricted the plane to a line for the face data above and then we copy the auxiliary values into the send buffer.

// now send any edge data

if (send_sides[l].size() == 2) {

// loop over the components

for (i=0; i<3; ++i) {